Interprocess communication (IPC) is an integral part of a distributed system in which multiple applications interact, synchronously or asynchronously, to function. IPC refers to the methods used to facilitate this interaction, primarily data exchange, between processes that are usually called cooperating processes.

Introduction

Independent processes may need to work together as small sub-processes for modularity, computational speed-up or convenience. In all such situations, the cooperating processes need to exchange data. Essentially, each process is expected to accept data, modify it and pass it to another process.

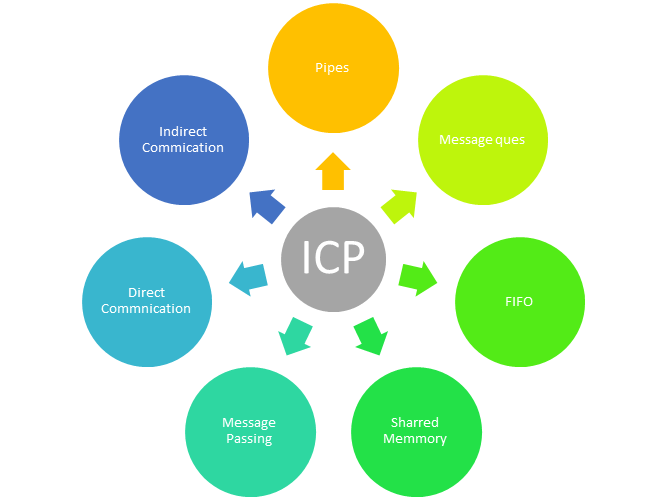

The primary modes of inter-process communication are shared memory based communication and network-based message transfer.

Types of IPC

Shared Memory

Shared memory communication uses a reserved chunk of memory dedicated to data exchange between the cooperating processes. The processes refer to a unique identifier to access that shared memory location and read or write data. Certain implementations of shared memory IPC ensure data safety by employing locks, and are designed to avoid deadlocks, race conditions and memory corruption.

Messaging

The broader category of IPC uses messaging, often over network protocols, for applications to interact. In essence, the kernel acts as a data bus that transports information from one application to the other.

Shared Memory System for IPC

The shared memory region usually resides in the address space of the process that creates it and actively writes to it. The process receiving the data attaches the shared memory to its own address space. The OS normally prevents two processes from sharing the same memory space, which avoids data corruption problems, so two cooperating processes using shared memory IPC need to declare their mutual agreement before this communication can happen.
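The create-and-attach steps can be sketched in Python with the standard library's `multiprocessing.shared_memory` module. This is a minimal illustration, not a complete design: both handles live in one process for brevity, whereas in practice the attach step would run in the second process after it learns the segment name. The helper name `roundtrip` and the payload are assumptions made for this example.

```python
from multiprocessing import shared_memory

def roundtrip(payload: bytes) -> bytes:
    """Write payload through one handle and read it back through another."""
    # The creating process reserves the segment; it gets a unique name.
    writer = shared_memory.SharedMemory(create=True, size=len(payload))
    try:
        writer.buf[:len(payload)] = payload
        # The cooperating process attaches using the agreed-upon name.
        reader = shared_memory.SharedMemory(name=writer.name)
        try:
            # Copy the bytes out so no view of the segment outlives close().
            return bytes(reader.buf[:len(payload)])
        finally:
            reader.close()
    finally:
        writer.close()
        writer.unlink()  # release the segment once no process needs it

print(roundtrip(b"hello"))
```

The segment name plays the role of the unique identifier, and passing it to the second process is one way of expressing the mutual agreement between the two.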

As the read and write steps may be executed asynchronously by the two processes and at different frequencies, it is important for shared memory locations to maintain a data buffer. The data buffer allows the write operation to happen on a different chunk of memory while the memory at a different location is read.

The shared memory space can have a bounded or an unbounded buffer. As the name suggests, an unbounded buffer lets the data-generating process keep writing to shared memory without limit, even while the reading process is slow to consume it. A bounded buffer has a fixed size, which prevents the writer from writing to shared memory when the buffer is full. A shared memory chunk may be cleaned up either after the reader consumes it or when it holds stale data that has gone unconsumed beyond a certain time threshold.
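A bounded buffer is often laid out as a ring, so that writes land in one slot while reads come from another. The sketch below is a single-process illustration of that layout; the class name `BoundedBuffer` and its methods are hypothetical, and a real shared memory implementation would also need locking between the two processes.

```python
class BoundedBuffer:
    """Fixed-capacity ring buffer: the writer and reader use separate indices."""

    def __init__(self, capacity: int) -> None:
        self._slots = [None] * capacity
        self._head = 0   # next slot to read
        self._tail = 0   # next slot to write
        self._count = 0  # number of unread items

    def try_write(self, item) -> bool:
        """Store item, or return False when the buffer is full."""
        if self._count == len(self._slots):
            return False
        self._slots[self._tail] = item
        self._tail = (self._tail + 1) % len(self._slots)
        self._count += 1
        return True

    def try_read(self):
        """Return the oldest unread item, or None when the buffer is empty."""
        if self._count == 0:
            return None
        item = self._slots[self._head]
        self._slots[self._head] = None  # free the slot once it is consumed
        self._head = (self._head + 1) % len(self._slots)
        self._count -= 1
        return item

buf = BoundedBuffer(capacity=2)
print(buf.try_write("a"), buf.try_write("b"), buf.try_write("c"))
print(buf.try_read())
print(buf.try_write("c"))
```

The third write is refused because both slots are occupied; it succeeds once the reader has consumed one item. Using `None` to signal an empty buffer is a simplification that assumes `None` is never a valid item.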

Messaging Systems for IPC

This paradigm of communication is particularly helpful in distributed systems that run multiple processes on different machines connected over a network. Messaging systems mostly allow bi-directional communication between two processes, which makes them more flexible than the shared memory paradigm in such settings. They also support transferring fixed-size or variable-size data.

Fixed-size systems make the message packet size easy to define but constrain the data generation step to that size. Variable-size messages are more complex to transport but make data generation easier.
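The trade-off can be illustrated with two framing schemes. This is a sketch under assumptions: the 16-byte packet size, the zero padding, and the 4-byte length prefix are choices made for this example, not something a particular messaging system prescribes.

```python
import struct

FIXED_SIZE = 16  # hypothetical packet size chosen for this sketch

def pack_fixed(payload: bytes) -> bytes:
    """Pad payload to exactly FIXED_SIZE bytes; reject anything larger."""
    if len(payload) > FIXED_SIZE:
        raise ValueError("payload exceeds the fixed packet size")
    return payload.ljust(FIXED_SIZE, b"\x00")

def pack_variable(payload: bytes) -> bytes:
    """Prefix payload with its length as a 4-byte big-endian integer."""
    return struct.pack("!I", len(payload)) + payload

def unpack_variable(stream: bytes) -> tuple:
    """Return (first message, remaining bytes) from a length-prefixed stream."""
    (length,) = struct.unpack("!I", stream[:4])
    return stream[4:4 + length], stream[4 + length:]

stream = pack_variable(b"hello") + pack_variable(b"hi")
first, rest = unpack_variable(stream)
print(first, unpack_variable(rest)[0])
```

With fixed-size packets the receiver always reads `FIXED_SIZE` bytes and the sender carries the burden of fitting the data. With the length prefix the sender writes whatever it has, and the receiver does the extra work of finding message boundaries.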

Any IPC using messages needs to establish a link between the two cooperating processes. The design choices for this communication link include 1. automatic or explicit buffering, 2. direct or indirect communication and 3. synchronous or asynchronous communication.

Direct Communication

Under the direct communication paradigm, a process sending or receiving a message must know the name of the other process.

- send(P, message): Send a message to process P

- receive(Q, message): Receive a message from process Q

For direct communication, processes only need the name/identifier of the process to send to or receive from. A link is associated with exactly two processes, and only one link can exist between each pair. This is a peer-to-peer scheme with symmetry in naming.

Sometimes, under direct communication, the receiving process does not need to name the process it is receiving data from. This allows the receiver to get data from multiple different processes. The sender still needs to name the recipient under this asymmetric setup.

Direct communication has limited modularity and scales poorly because process identifiers are hard-coded: changing an identifier means updating every process that refers to it.
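Both naming schemes can be sketched with one inbox per named process. This is an in-process illustration only: the process names, the explicit `sender` argument (implicit in a real system), and the optional `source` parameter are assumptions made for this example.

```python
# One inbox per named process; the names "P" and "Q" are illustrative.
inboxes = {"P": [], "Q": []}

def send(sender: str, dest: str, message: str) -> None:
    """Deliver message directly to the process named dest."""
    inboxes[dest].append((sender, message))

def receive(me: str, source=None):
    """Take the oldest matching message addressed to me.

    Symmetric naming: pass source to accept only that sender.
    Asymmetric naming: leave source as None to accept any sender.
    Returns (sender, message), or None if nothing matches.
    """
    for i, (sender, message) in enumerate(inboxes[me]):
        if source is None or sender == source:
            return inboxes[me].pop(i)
    return None

send("P", "Q", "ping")
print(receive("Q", source="P"))
```

Note how the names `"P"` and `"Q"` appear in every call. Renaming a process means touching each call site, which is the modularity cost described above.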

Indirect Communication

This paradigm avoids using process identities; data is instead delivered to or received from ports on the machine. A process can send a message to a mailbox/port with a unique identifier, and the receiver can read from the same mailbox/port.

- send(A, message): Send a message to port/mailbox A

- receive(A, message): Receive a message from port/mailbox A

Here, a link can be established between more than two processes. Each pair of communicating processes can have multiple links, where each link corresponds to one mailbox or port.

The point of interaction, i.e. the mailbox or the port, can be owned either by a process or by the OS.
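A mailbox can be sketched as a queue looked up by an identifier rather than by a process name. The registry `mailboxes` and the helper names below are hypothetical, and the whole thing runs in a single process purely for illustration.

```python
import queue

# Mailboxes are keyed by an identifier that is independent of any process.
mailboxes = {}

def create_mailbox(mailbox_id: str) -> None:
    """Register an empty mailbox under mailbox_id."""
    mailboxes[mailbox_id] = queue.Queue()

def send(mailbox_id: str, message: str) -> None:
    """Deposit message in the mailbox; no receiver is named."""
    mailboxes[mailbox_id].put(message)

def receive(mailbox_id: str) -> str:
    """Take the oldest message; raises queue.Empty if there is none."""
    return mailboxes[mailbox_id].get_nowait()

create_mailbox("A")
send("A", "job-1")
send("A", "job-2")
print(receive("A"), receive("A"))
```

Neither `send` nor `receive` mentions a process, so any number of senders and receivers that know the identifier `"A"` can share the same link.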

Synchronous or Asynchronous Communications

Asynchronous communication is non-blocking while synchronous communication is blocking. This applies to the sender as well as the receiver. A blocking call stalls the process until the communication step, be it sending or receiving data, completes. A sender may block until the message is delivered, and a receiver may block until it has received the complete message.
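The difference on the receiver side can be sketched with Python's `queue.Queue`, using a thread to stand in for the sending process. The helper names and the 0.1 second delay are assumptions made for this example.

```python
import queue
import threading
import time

channel = queue.Queue()

def try_receive(q):
    """Non-blocking receive: return a message, or None if none is waiting."""
    try:
        return q.get_nowait()
    except queue.Empty:
        return None

def blocking_receive(q):
    """Blocking receive: wait until a message arrives."""
    return q.get()

def delayed_send(q, message, delay):
    """Stand-in for a sender that takes a while to produce its message."""
    time.sleep(delay)
    q.put(message)

print(try_receive(channel))  # nothing has been sent yet
threading.Thread(target=delayed_send, args=(channel, "hello", 0.1)).start()
print(blocking_receive(channel))  # stalls until the sender delivers
```

The non-blocking call returns immediately and leaves it to the caller to retry, while the blocking call keeps the code simpler at the cost of stalling the receiver.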

Buffered or non-buffered Communication

Messages when in transit between the sender and the receiver are maintained in a queue that manages the frequency of incoming and outgoing data.

- Zero capacity queues cannot hold any messages and thus block the sender from sending more data until the previous data has been consumed

- Bounded capacity queues keep accumulating messages till their capacity is full and then go into blocking mode until more space is available
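The bounded case can be sketched with `queue.Queue(maxsize=...)`. The helper `fill` is hypothetical and uses non-blocking puts so that the full condition is visible instead of stalling the example. One caveat when trying this in Python: `maxsize=0` means an unbounded queue there, not a zero capacity one.

```python
import queue

def fill(q, messages):
    """Enqueue messages without blocking; return those that did not fit."""
    rejected = []
    for m in messages:
        try:
            q.put_nowait(m)
        except queue.Full:
            rejected.append(m)
    return rejected

bounded = queue.Queue(maxsize=2)
print(fill(bounded, ["a", "b", "c"]))  # the third message does not fit
bounded.get()                          # the receiver consumes one message
print(fill(bounded, ["c"]))            # now there is room again
```

With a plain `put` instead of `put_nowait`, the sender would block at the third message until the receiver made space, which is the blocking mode described in the list above.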

Continued in the next part